The two Kubernetes controllers for AWS NLB

You googled for documentation on Kubernetes LoadBalancer Service annotations like service.beta.kubernetes.io/aws-load-balancer-type, but you are still unsure which values you can put there? Well, you’ve reached the right place!

The different Kubernetes controllers for AWS

There are two main Kubernetes controllers available to manage AWS Load Balancer instances:

- the legacy Kubernetes "Cloud Controller Manager"
- the "AWS Load Balancer Controller"

The legacy controller

The first one has its codebase in the main Kubernetes repository (a.k.a. the "in-tree" cloud controller). It is being deprecated and moved to a new, external repository.

🍃 out-of-tree

The out-of-tree version requires a few extra steps to be enabled. See the documentation link.

The new AWS Load Balancer controller

Formerly known as the AWS ALB Ingress Controller, it was renamed the AWS Load Balancer Controller and comes with new functionality, such as:

- Network Load Balancers (NLB) for Kubernetes Services
- sharing ALBs across multiple Kubernetes Ingress rules
- a new TargetGroupBinding custom resource
- support for fully private clusters

So it can be used both as a controller for NLB instances and as an Ingress controller (if you like using Ingress resources).

In this blog post, I will demo the new AWS Load Balancer controller for NLBs.

For that, we will deploy the AWS LB controller, which exposes many more NLB options, and hand our Service over to it with a special annotation: service.beta.kubernetes.io/aws-load-balancer-type: "external".

EKS cluster setup

If you already have an EKS cluster running, skip ahead to the next section.

I’m using Kubernetes 1.21 and spinning up the cluster with eksctl, which has several advantages, including:

- it simplifies cluster creation
- it tags the subnets as required by the AWS load balancer controllers

The command I used:

```shell
eksctl create cluster \
  --name ${CLUSTER_NAME} \
  --version 1.21 \
  --region ${REGION} \
  --nodegroup-name linux-nodes \
  --nodes 3 \
  --nodes-min 2 \
  --nodes-max 4 \
  --with-oidc \
  --spot \
  --managed
```

Of course, other friendly options include using Terraform.
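Note that the command above relies on CLUSTER_NAME and REGION, which this post only exports later, in the IAM section. A small guard like the one below (a hypothetical helper of mine, not an eksctl feature) fails fast when one of them is missing, instead of letting eksctl run with empty values:

```shell
#!/usr/bin/env bash
# Hypothetical helper: return non-zero (and say why) if any of the
# named environment variables is unset or empty.
require_env() {
  local name missing=0
  for name in "$@"; do
    # ${!name} is bash indirection: the value of the variable called $name
    if [[ -z "${!name:-}" ]]; then
      echo "error: ${name} is not set" >&2
      missing=1
    fi
  done
  return "${missing}"
}

# Usage:
#   export CLUSTER_NAME="my-cluster" REGION="eu-central-1"
#   require_env CLUSTER_NAME REGION && eksctl create cluster --name ${CLUSTER_NAME} --region ${REGION} ...
```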

Deploying a simple workload

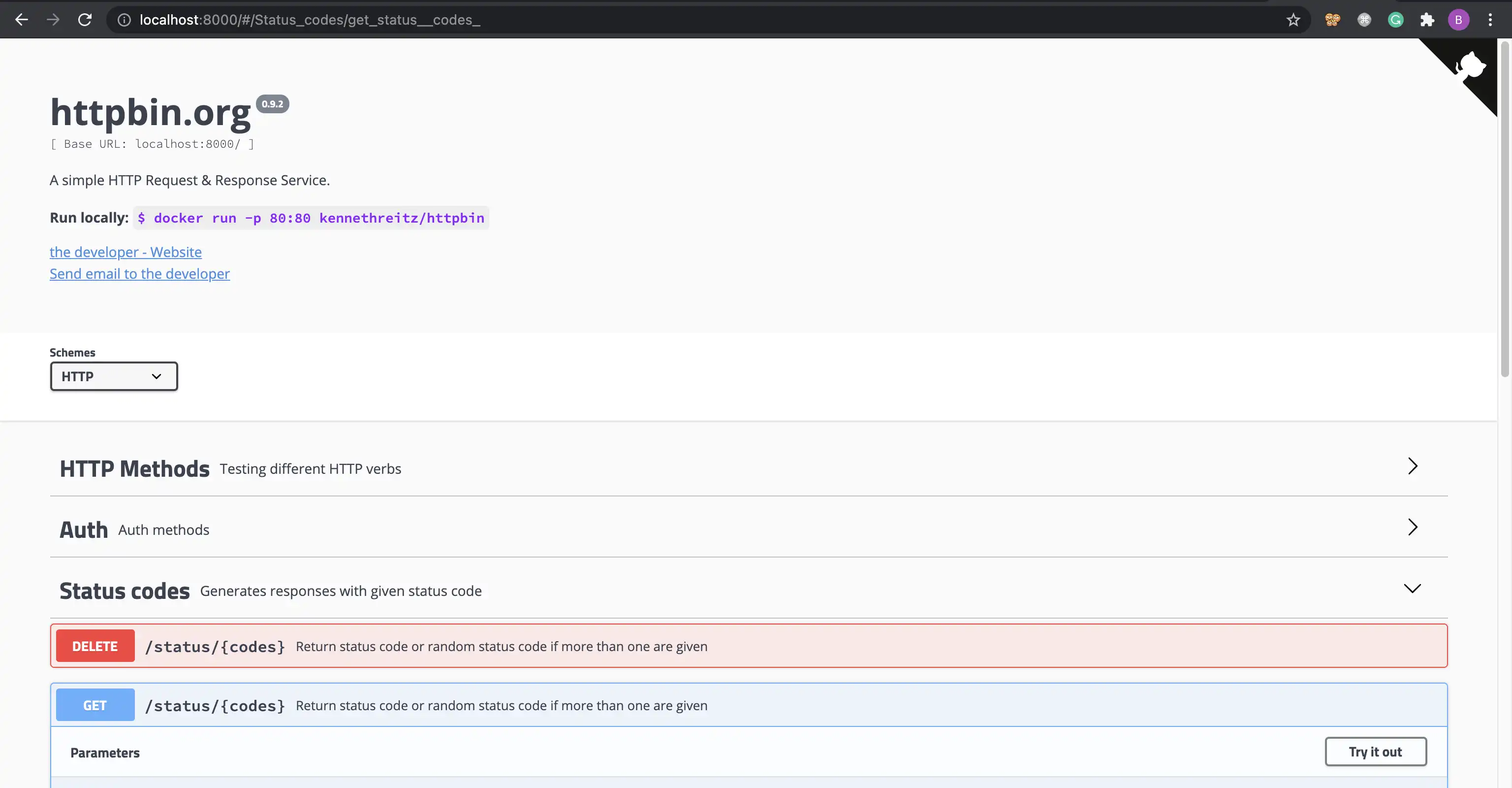

Let’s deploy a simple httpbin backend server:

```shell
kubectl apply -f - <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: httpbin
      version: v1
  template:
    metadata:
      labels:
        app: httpbin
        version: v1
    spec:
      containers:
        - image: docker.io/kennethreitz/httpbin
          imagePullPolicy: IfNotPresent
          name: httpbin
          env:
            - name: GUNICORN_CMD_ARGS
              value: "--capture-output --error-logfile - --access-logfile - --access-logformat '%(h)s %(t)s %(r)s %(s)s Host: %({Host}i)s'"
          ports:
            - containerPort: 80
EOF
```

Visit the home page:

```shell
kubectl port-forward deploy/httpbin 8000:80 &
open http://localhost:8000  # macOS; use xdg-open on Linux
```

We will use the /status/200 path as a health check endpoint for the load balancer.

[LEGACY] Kubernetes in-tree controller for AWS NLB

It’s definitely not the purpose of this article, but it’s always good to know where we come from. So, let’s have a quick look at the in-tree controller for AWS ELBs.

CLB

Before trying the NLB out and just out of curiosity, let’s create a basic Service with type: LoadBalancer, without any extra annotations, and see what happens on the AWS side:

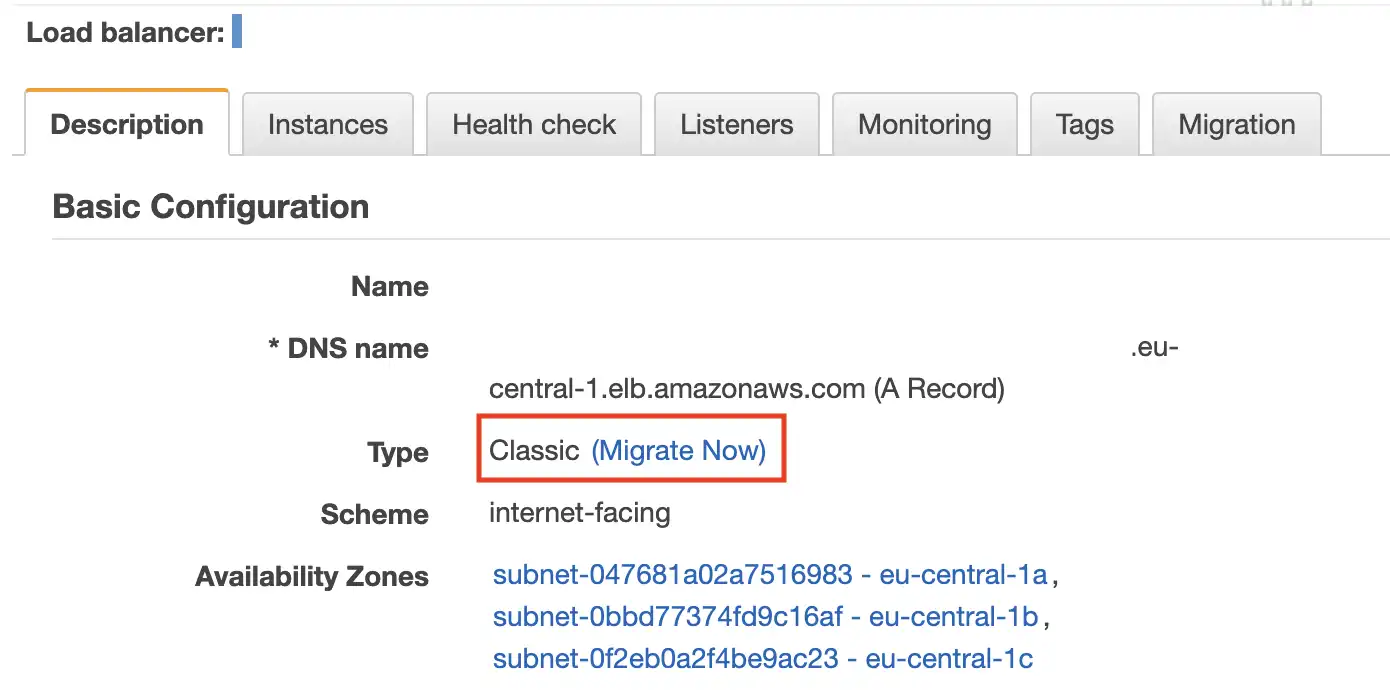

```shell
kubectl expose deploy httpbin --type LoadBalancer --port 8000 --target-port=80
```

The result is a Classic ELB (CLB) instance. AWS will encourage you to migrate to an NLB instance type:

Basic health check config:

Quick test:

```shell
domain=$(kubectl get svc httpbin -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
curl ${domain}:8000/headers
```

```json
{
  "headers": {
    "Accept": "*/*",
    "Host": "...eu-central-1.elb.amazonaws.com:8000",
    "User-Agent": "curl/7.64.1"
  }
}
```

This is the simplest way of getting an AWS LB up & running. Let’s start over with an NLB.
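Right after `kubectl expose`, the `hostname` field is still empty and the new DNS name can take a few minutes to resolve, so the quick test above may fail at first. Here is a minimal retry wrapper (a hypothetical helper, not a kubectl or curl feature) that I find handy for these waits:

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command up to <attempts> times, sleeping
# <delay> seconds between failures. Returns 0 on the first success,
# otherwise the exit status of the last attempt.
retry() {
  local attempts=$1 delay=$2 i status=1
  shift 2
  for (( i = 1; i <= attempts; i++ )); do
    "$@" && return 0
    status=$?
    if (( i < attempts )); then
      echo "attempt ${i}/${attempts} failed (exit ${status}), retrying in ${delay}s" >&2
      sleep "${delay}"
    fi
  done
  return "${status}"
}

# Usage, with ${domain} set as in the quick test:
#   retry 30 10 curl -sf -o /dev/null "http://${domain}:8000/status/200"
```

`curl -f` makes HTTP errors count as failures, so the loop only stops once httpbin actually answers through the load balancer.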

Clean this up:

```shell
kubectl delete svc httpbin
```

NLB

Here is a simple configuration using annotations picked from the documentation:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: httpbin
  namespace: default
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-type: "nlb"
spec:
  ports:
    - port: 8000
      protocol: TCP
      targetPort: 80
  selector:
    app: httpbin
    version: v1
  type: LoadBalancer
```

But as soon as you start adding more annotations that were originally designed for CLB instances, NLB creation starts to fail. Examples:

```yaml
service.beta.kubernetes.io/aws-load-balancer-access-log-enabled: "true"
service.beta.kubernetes.io/aws-load-balancer-access-log-emit-interval: "5"
service.beta.kubernetes.io/aws-load-balancer-access-log-s3-bucket-name: ${BUCKET_NAME}
service.beta.kubernetes.io/aws-load-balancer-access-log-s3-bucket-prefix: "Aug31"
service.beta.kubernetes.io/aws-load-balancer-healthcheck-interval: "5"
```

Plus, for some reason, it takes up to 5 minutes for the LB to validate the health check targets (using TCP), and therefore a long time before the LB is ready to accept downstream connections.
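Being able to tune the health checks is what makes the difference here. As a rough back-of-the-envelope estimate (an assumption that ignores registration delays and when the very first check fires), a new target needs `healthy-threshold` consecutive successful checks spaced `interval` seconds apart before it is marked healthy:

```shell
#!/usr/bin/env bash
# Rough estimate (assumption): a newly registered target is marked healthy
# after <healthy_threshold> consecutive successful checks, one every
# <interval_seconds>. Registration delay and the timing of the first check
# are ignored, so treat the result as an order of magnitude.
estimate_time_to_healthy() {
  local healthy_threshold=$1 interval_seconds=$2
  if ! [[ "${healthy_threshold}" =~ ^[1-9][0-9]*$ && "${interval_seconds}" =~ ^[1-9][0-9]*$ ]]; then
    echo "usage: estimate_time_to_healthy <healthy_threshold> <interval_seconds>" >&2
    return 1
  fi
  echo $(( healthy_threshold * interval_seconds ))
}

# estimate_time_to_healthy 2 10   # values used later in this post
# estimate_time_to_healthy 3 30   # an illustrative, slower setting
```

With the values used later in this post (threshold 2, interval 10), that is about 20 seconds; an illustrative setting of 3 checks every 30 seconds already means about 90 seconds.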

You need something more customizable!

Welcome the new AWS LB Controller! 🎉

As explained in the intro, this is an additional controller you have to install on your EKS cluster. You will find quick-start instructions in the next section.

Don’t forget to delete this NLB instance:

```shell
kubectl delete svc httpbin
# double-check that the EC2 target group has been deleted too...
```

[NEW] Deploying the AWS Load Balancer controller

So the AWS Load Balancer Controller is now the recommended way of working with NLBs.

Here is a quick-start guide, mainly inspired by the official documentation. The first step is to give our cluster a Service Account with enough rights to create & configure NLB instances.

One requirement is to have an IAM OIDC provider bound to your cluster. For more information, follow the guide here.
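If you are not sure whether your cluster already has one (the `--with-oidc` flag used earlier creates it, for instance), you can compare the cluster's OIDC issuer ID with the providers registered in IAM. A sketch, where `oidc_id_from_issuer` is a hypothetical helper and the AWS CLI calls are shown as comments:

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the trailing ID from an EKS OIDC issuer URL,
# e.g. https://oidc.eks.eu-central-1.amazonaws.com/id/EXAMPLE123 -> EXAMPLE123
oidc_id_from_issuer() {
  local issuer=${1%/}     # drop a trailing slash, if any
  echo "${issuer##*/}"    # keep what follows the last '/'
}

# Usage (requires the AWS CLI and credentials):
#   issuer=$(aws eks describe-cluster --name ${CLUSTER_NAME} --region ${REGION} \
#     --query "cluster.identity.oidc.issuer" --output text)
#   if aws iam list-open-id-connect-providers | grep -q "$(oidc_id_from_issuer "${issuer}")"; then
#     echo "OIDC provider already associated"
#   fi
```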

Here is a snippet describing the IAM binding process:

```shell
export CLUSTER_NAME="my-cluster"
export REGION="eu-central-1"
export AWS_ACCOUNT_ID=XXXXXXXXXX # your 12-digit AWS account ID
export IAM_POLICY_NAME=AWSLoadBalancerControllerIAMPolicy
export IAM_SA=aws-load-balancer-controller

# Set up an IAM OIDC provider for the cluster to enable IAM roles for pods
eksctl utils associate-iam-oidc-provider \
  --region ${REGION} \
  --cluster ${CLUSTER_NAME} \
  --approve

# Fetch the IAM policy required by our Service Account
curl -o iam-policy.json https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.2.4/docs/install/iam_policy.json

# Create the IAM policy
aws iam create-policy \
  --policy-name ${IAM_POLICY_NAME} \
  --policy-document file://iam-policy.json

# Create the k8s Service Account
eksctl create iamserviceaccount \
  --cluster=${CLUSTER_NAME} \
  --namespace=kube-system \
  --name=${IAM_SA} \
  --attach-policy-arn=arn:aws:iam::${AWS_ACCOUNT_ID}:policy/${IAM_POLICY_NAME} \
  --override-existing-serviceaccounts \
  --approve \
  --region ${REGION}

# Check out the new SA in your cluster for the AWS LB controller
kubectl -n kube-system get sa aws-load-balancer-controller -o yaml
```

This last command should output something similar to:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  annotations:
    eks.amazonaws.com/role-arn: arn:aws:iam::<AWS ACCOUNT>:role/eksctl-<CLUSTER NAME>-addon-iamserviceaccou-Role1-LP7RKD47QPSJFH
  labels:
    app.kubernetes.io/managed-by: eksctl
  ...
  name: aws-load-balancer-controller
  namespace: kube-system
secrets:
  - name: aws-load-balancer-controller-token-p8qvr
```

The final step is to deploy the AWS Load Balancer Controller:

```shell
kubectl apply -k "github.com/aws/eks-charts/stable/aws-load-balancer-controller/crds?ref=master"

helm repo add eks https://aws.github.io/eks-charts
helm repo update

helm install aws-load-balancer-controller eks/aws-load-balancer-controller \
  -n kube-system \
  --set clusterName=${CLUSTER_NAME} \
  --set serviceAccount.create=false \
  --set serviceAccount.name=${IAM_SA}
```

Check that everything is running smoothly:

```shell
kubectl -n kube-system get po
```

Expected output:

```
NAME                                            READY   STATUS    RESTARTS   AGE
aws-load-balancer-controller-847c9d5885-ssn8q   1/1     Running   0          11s
aws-load-balancer-controller-847c9d5885-v9qwq   1/1     Running   0          11s
aws-node-bxmqr                                  1/1     Running   0          4h2m
aws-node-p6cm2                                  1/1     Running   0          4h2m
aws-node-rn47z                                  1/1     Running   0          4h2m
coredns-745979c988-dd92b                        1/1     Running   0          4h15m
coredns-745979c988-gwr2w                        1/1     Running   0          4h15m
kube-proxy-n82bc                                1/1     Running   0          4h2m
kube-proxy-tlfcd                                1/1     Running   0          4h2m
kube-proxy-vn7jg                                1/1     Running   0          4h2m
```

Using the AWS Load Balancer Controller

First, let’s tell the Kubernetes in-tree cloud controller not to process the Service, so that the AWS Load Balancer Controller handles it instead. The switch is the service.beta.kubernetes.io/aws-load-balancer-type annotation: with the value "external", the in-tree controller leaves the Service alone.
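To sum up the values covered in this post, here is a small lookup (a hypothetical helper) telling which controller picks up a `type: LoadBalancer` Service depending on that annotation. It reflects the setup described here (the IAM policy above targets controller v2.2.4); newer AWS Load Balancer Controller releases can also take over Services without the annotation, so double-check the docs for your version:

```shell
#!/usr/bin/env bash
# Hypothetical lookup summarising this post: which controller handles a
# "type: LoadBalancer" Service, given its
# service.beta.kubernetes.io/aws-load-balancer-type annotation value.
controller_for_lb_type() {
  case "${1:-}" in
    "")       echo "in-tree cloud controller (Classic ELB)" ;;
    nlb)      echo "in-tree cloud controller (NLB)" ;;
    external) echo "AWS Load Balancer Controller (NLB)" ;;
    *)        echo "value not covered in this post: $1" >&2; return 1 ;;
  esac
}

# Usage:
#   lb_type=$(kubectl get svc httpbin \
#     -o jsonpath='{.metadata.annotations.service\.beta\.kubernetes\.io/aws-load-balancer-type}')
#   controller_for_lb_type "${lb_type}"
```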

Also, we want the NLB to be publicly visible (by default, it is an internal NLB), and we want it to use instance mode (which helps with client IP preservation).
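The scheme also decides which subnets the NLB lands in. The AWS Load Balancer Controller auto-discovers subnets through tags (the ones eksctl added for us): `kubernetes.io/role/elb` on public subnets and `kubernetes.io/role/internal-elb` on private ones. A small lookup (a hypothetical helper) plus an AWS CLI query to list the matching subnets:

```shell
#!/usr/bin/env bash
# Hypothetical lookup: the subnet tag the AWS Load Balancer Controller uses
# to auto-discover subnets for a given aws-load-balancer-scheme value.
subnet_tag_for_scheme() {
  case "$1" in
    internet-facing) echo "kubernetes.io/role/elb" ;;          # public subnets
    internal)        echo "kubernetes.io/role/internal-elb" ;; # private subnets
    *) echo "unsupported scheme: $1" >&2; return 1 ;;
  esac
}

# Usage (requires the AWS CLI; eksctl sets these tags to "1"):
#   tag=$(subnet_tag_for_scheme internet-facing)
#   aws ec2 describe-subnets --region ${REGION} \
#     --filters "Name=tag:${tag},Values=1" \
#     --query 'Subnets[].[SubnetId,AvailabilityZone]' --output text
```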

Change from:

```yaml
service.beta.kubernetes.io/aws-load-balancer-type: "nlb"
```

to:

```yaml
# use the AWS LB Controller
service.beta.kubernetes.io/aws-load-balancer-type: "external"
# we want an internet-facing NLB
service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
# use target groups in instance mode
service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: "instance"
```

Access logs

You need to create a new S3 bucket with the required AWS permissions:

```shell
# S3 bucket names are globally unique: pick your own
BUCKET_NAME=baptiste-access-logs-nlb-demo
aws s3 mb s3://${BUCKET_NAME}
aws s3api put-public-access-block --bucket ${BUCKET_NAME} --public-access-block-configuration BlockPublicAcls=true,IgnorePublicAcls=true,BlockPublicPolicy=true,RestrictPublicBuckets=true

cat <<EOF > bucket-policy.json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AWSLogDeliveryWrite",
      "Effect": "Allow",
      "Principal": {
        "Service": "delivery.logs.amazonaws.com"
      },
      "Action": "s3:PutObject",
      "Resource": "arn:aws:s3:::${BUCKET_NAME}/*",
      "Condition": {
        "StringEquals": {
          "s3:x-amz-acl": "bucket-owner-full-control"
        }
      }
    },
    {
      "Sid": "AWSLogDeliveryAclCheck",
      "Effect": "Allow",
      "Principal": {
        "Service": "delivery.logs.amazonaws.com"
      },
      "Action": "s3:GetBucketAcl",
      "Resource": "arn:aws:s3:::${BUCKET_NAME}"
    }
  ]
}
EOF

aws s3api put-bucket-policy --bucket ${BUCKET_NAME} --policy file://bucket-policy.json
```

Enable access logs:

```yaml
# Annotations for access logs - will soon be deprecated in favor of "aws-load-balancer-attributes"
service.beta.kubernetes.io/aws-load-balancer-access-log-enabled: "true"
service.beta.kubernetes.io/aws-load-balancer-access-log-s3-bucket-name: "${BUCKET_NAME}"
service.beta.kubernetes.io/aws-load-balancer-access-log-s3-bucket-prefix: "my-httpbin-app"
# LB attributes - you can concatenate several key=value pairs, separated by commas
service.beta.kubernetes.io/aws-load-balancer-attributes: "load_balancing.cross_zone.enabled=false"
```

Backend config

# Backend procotol - change to SSL if you are doing the TLS offloading upstream

service.beta.kubernetes.io/aws-load-balancer-backend-protocol: "http" # https://github.com/kubernetes/kubernetes/blob/master/staging/src/k8s.io/legacy-cloud-providers/aws/aws.go#L182

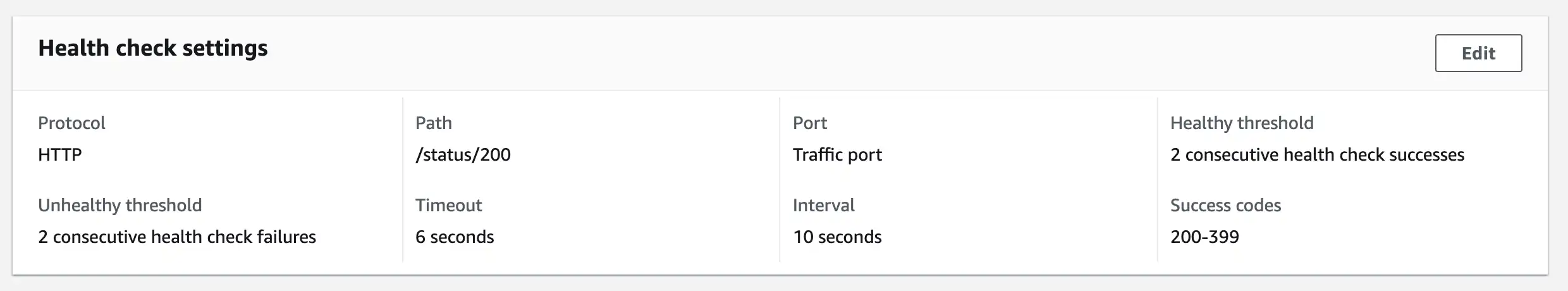

# service.beta.kubernetes.io/aws-load-balancer-ssl-ports: "https" # https://github.com/kubernetes/kubernetes/blob/master/staging/src/k8s.io/legacy-cloud-providers/aws/aws.go#L168Health checks

Enable health checks:

```yaml
service.beta.kubernetes.io/aws-load-balancer-healthcheck-healthy-threshold: "2" # https://github.com/kubernetes/kubernetes/blob/master/staging/src/k8s.io/legacy-cloud-providers/aws/aws.go#L209 2 to 20
service.beta.kubernetes.io/aws-load-balancer-healthcheck-unhealthy-threshold: "2" # 2-10
service.beta.kubernetes.io/aws-load-balancer-healthcheck-interval: "10" # 10 or 30
service.beta.kubernetes.io/aws-load-balancer-healthcheck-path: "/status/200"
service.beta.kubernetes.io/aws-load-balancer-healthcheck-protocol: "HTTP"
service.beta.kubernetes.io/aws-load-balancer-healthcheck-port: "traffic-port"
service.beta.kubernetes.io/aws-load-balancer-healthcheck-timeout: "6" # 6 is the minimum
```

More annotations

More annotations can be found here.
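Since the access log annotations are meant to be replaced by `aws-load-balancer-attributes`, that single annotation tends to grow into a long comma-separated string. A tiny helper (hypothetical, plain bash) to assemble it from `key=value` pairs; the `access_logs.s3.*` keys in the usage comment are the NLB attribute names for access logs:

```shell
#!/usr/bin/env bash
# Hypothetical helper: join key=value pairs with commas, the format expected
# by the service.beta.kubernetes.io/aws-load-balancer-attributes annotation.
join_lb_attributes() {
  local pair
  for pair in "$@"; do
    if [[ "${pair}" != *=* ]]; then
      echo "not a key=value pair: ${pair}" >&2
      return 1
    fi
  done
  local IFS=,      # "$*" joins the arguments with the first character of IFS
  echo "$*"
}

# Usage: the access log settings expressed as NLB attributes
#   attrs=$(join_lb_attributes \
#     access_logs.s3.enabled=true \
#     access_logs.s3.bucket=${BUCKET_NAME} \
#     access_logs.s3.prefix=my-httpbin-app \
#     load_balancing.cross_zone.enabled=false)
#   echo "service.beta.kubernetes.io/aws-load-balancer-attributes: \"${attrs}\""
```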

ALL-IN-ONE

Let’s gather all these annotations:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: httpbin
  namespace: default
  annotations:
    # NLB
    service.beta.kubernetes.io/aws-load-balancer-type: "external"
    service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: "instance"
    # Backend
    service.beta.kubernetes.io/aws-load-balancer-backend-protocol: "http" # https://github.com/kubernetes/kubernetes/blob/master/staging/src/k8s.io/legacy-cloud-providers/aws/aws.go#L182
    # Access logs
    service.beta.kubernetes.io/aws-load-balancer-access-log-enabled: "true"
    service.beta.kubernetes.io/aws-load-balancer-access-log-s3-bucket-name: "${BUCKET_NAME}"
    service.beta.kubernetes.io/aws-load-balancer-access-log-s3-bucket-prefix: "my-httpbin-app"
    # service.beta.kubernetes.io/aws-load-balancer-access-log-emit-interval: "5" # not yet implemented
    # LB attributes - you can concatenate several key=value pairs, separated by commas
    service.beta.kubernetes.io/aws-load-balancer-attributes: "load_balancing.cross_zone.enabled=false"
    # Health checks
    service.beta.kubernetes.io/aws-load-balancer-healthcheck-healthy-threshold: "2" # https://github.com/kubernetes/kubernetes/blob/master/staging/src/k8s.io/legacy-cloud-providers/aws/aws.go#L209 2 to 20
    service.beta.kubernetes.io/aws-load-balancer-healthcheck-unhealthy-threshold: "2" # 2-10
    service.beta.kubernetes.io/aws-load-balancer-healthcheck-interval: "10" # 10 or 30
    service.beta.kubernetes.io/aws-load-balancer-healthcheck-path: "/status/200"
    service.beta.kubernetes.io/aws-load-balancer-healthcheck-protocol: "HTTP"
    service.beta.kubernetes.io/aws-load-balancer-healthcheck-port: "traffic-port"
    service.beta.kubernetes.io/aws-load-balancer-healthcheck-timeout: "6" # 6 is the minimum
spec:
  ports:
    - port: 8000
      protocol: TCP
      targetPort: 80
  selector:
    app: httpbin
    version: v1
  type: LoadBalancer
```

When annotations are bigger than the rest of the resource 😬
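One caveat with this manifest: `kubectl apply -f` does not expand shell variables, so `${BUCKET_NAME}` reaches the API server literally unless you apply it through a shell heredoc, as done for the Deployment. A sketch of another option, substituting only that placeholder in plain bash (`render_bucket_name` and the file name `httpbin-svc.yaml` are hypothetical):

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a manifest on stdin and replace every literal
# ${BUCKET_NAME} placeholder with the value of the BUCKET_NAME variable.
render_bucket_name() {
  local placeholder='${BUCKET_NAME}' manifest
  if [[ -z "${BUCKET_NAME:-}" ]]; then
    echo "error: BUCKET_NAME is not set" >&2
    return 1
  fi
  manifest=$(cat)
  # Quoting the pattern makes bash match the placeholder text literally
  printf '%s\n' "${manifest//"${placeholder}"/${BUCKET_NAME}}"
}

# Usage:
#   render_bucket_name < httpbin-svc.yaml | kubectl apply -f -
```

Unlike `envsubst` (from GNU gettext), this needs no extra package and leaves any other `$` in the file untouched.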

And just after a few seconds:

The access logs are correctly configured:

So are the health check settings, which are correctly reflected:

Thanks to these health checks, the targets become available quickly:

Closing words

Honestly, I found the information pretty scattered across AWS docs, AWS blog posts, Kubernetes docs, third-party websites, etc. I hope I was able to shed some light on these Kubernetes-to-NLB mechanics.

Nothing outstanding here, but it’s good to see annotations doing their job! Plus, being able to control HTTP health checks for a Network Load Balancer from annotations is not something you will find on every cloud provider.

Cleanup

```shell
# NLB
kubectl delete svc httpbin

# HTTPBIN
kubectl delete deploy httpbin

# AWS LB controller
helm delete aws-load-balancer-controller -n kube-system

# IAM SA
eksctl delete iamserviceaccount \
  --cluster=${CLUSTER_NAME} \
  --namespace=kube-system \
  --name=${IAM_SA} \
  --region ${REGION}

# S3 bucket
BUCKET_NAME=baptiste-access-logs-nlb-demo
aws s3 rm s3://${BUCKET_NAME} --recursive
aws s3 rb s3://${BUCKET_NAME}
```
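The commands above leave two things behind: the IAM policy created for the controller and, if you created it only for this demo, the EKS cluster itself. A sketch to remove them too, with a hypothetical `DRY_RUN` switch so you can review the commands before running them:

```shell
#!/usr/bin/env bash
# Hypothetical switch: print commands instead of running them when DRY_RUN is non-empty.
run() {
  if [[ -n "${DRY_RUN:-}" ]]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Remove the IAM policy created for the controller, then the demo cluster.
# Run this after the IAM service account deletion above, so the policy is
# no longer attached to the eksctl-managed role.
cleanup_extras() {
  run aws iam delete-policy \
    --policy-arn "arn:aws:iam::${AWS_ACCOUNT_ID}:policy/${IAM_POLICY_NAME}"
  run eksctl delete cluster --name "${CLUSTER_NAME}" --region "${REGION}"
}

# Usage:
#   DRY_RUN=1 cleanup_extras   # review the commands
#   cleanup_extras             # actually run them
```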