Serverless API with Google Cloud - Re-building the Medium claps button

In this article, I will add a Medium-style applause button to my Hugo blog and build its backend as a simple API, using some serverless magic.

For the backend, I’m using Google Cloud services: Cloud Functions for the code, Firestore as a document database and Memorystore as a low-latency key-value store.

So this post is about consuming these services through their APIs, with JavaScript and Go. I will also explain how I tried to secure my API running on Cloud Functions.

How it started

With my blog online, generated by Hugo and hosted on GCS, I was looking for a way to get some feedback from my audience. Analytics are great but don’t measure appreciation.

After a bit of googling, I found a similar initiative with an animated claps button. From there, I mainly picked up the reference to the codepen.io script:

That became my use case (and excuse) for building a small app relying on Google Cloud’s serverless capabilities.

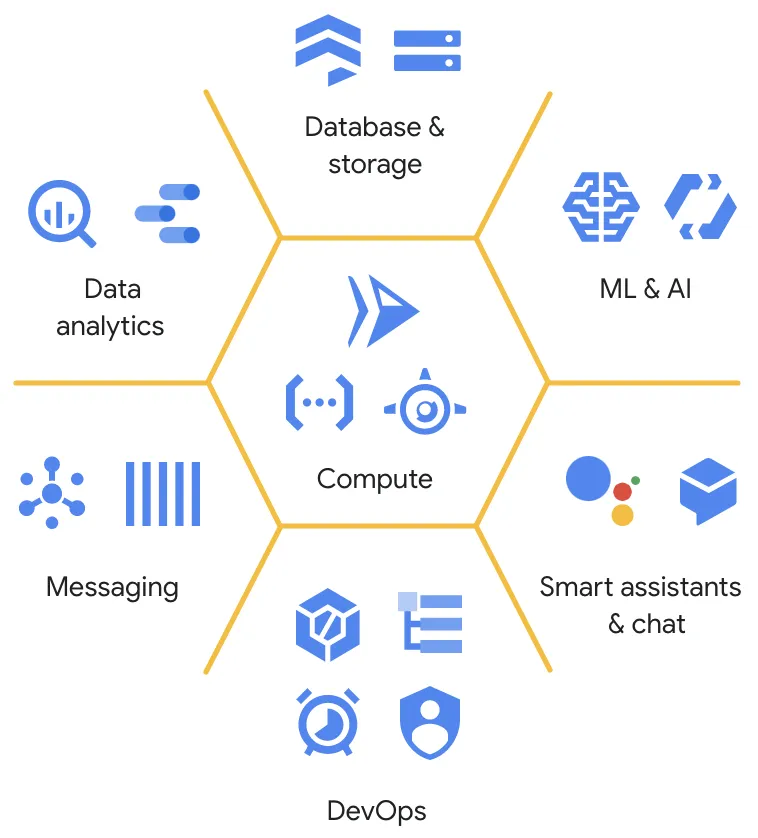

Exploring GCP’s serverless services

Yep, serverless is not just about "Functions" or "Lambdas". It’s more about managed applications and runtimes that scale automatically, and paying only for what you use.

Here are the current services in Google’s serverless family:

I don’t know all of them, but I picked these:

- Cloud Functions, of course, to run the code
- Firestore as the storage for the 'claps' count

And also Memorystore, as a managed Redis service. Memorystore is also available for Memcached. Memorystore is not part of the serverless family, though: its pricing sits somewhere between the classic VM pricing model and a serverless one. You pay a few "micro-dollars" per GB per second. Okay, that’s about $1.20 per day for the smallest instance, which offers 3GB of memory.
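Taking these figures at face value, the per-second price works out as follows. This is indeed a few micro-dollars per GB per second:

$$
\frac{1.20\ \text{USD/day}}{3\ \text{GB} \times 86\,400\ \text{s/day}} \approx 4.6 \times 10^{-6}\ \text{USD per GB per second}
$$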

A whole new world, or two… or three!

Before diving into the code, I realized that I had to catch up with some technologies that were new to me 😄

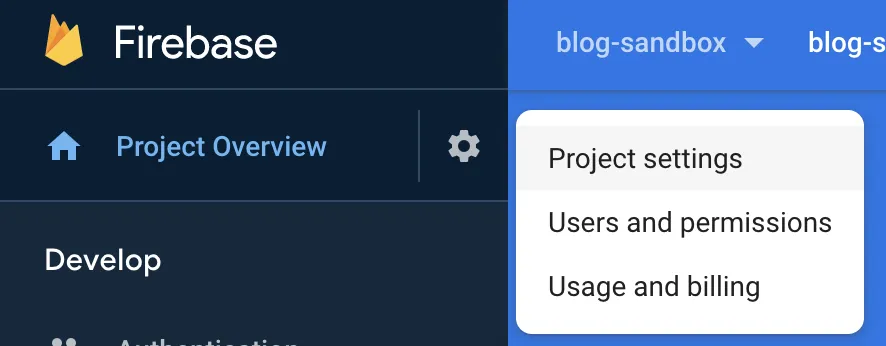

For instance, Firestore is part of the Firebase platform, and it looks like you cannot use it without a Firebase account. What the heck is Firebase when you’ve never developed a mobile app?! From my reading, I retained that "Firestore is a joint product between the Google Cloud and Firebase teams".

Also, Cloud Functions only support a few runtimes, namely Node.js >= 8, Python 3.7 and Go >= 1.11. Coming from Java, I had to take a few lessons first.

Finally, the frontend. Oh dear! What has happened since jQuery back in 2010? I read about things like "web components", "TypeScript", "yarn", "promises", the "fetch API"… whoa!! Not to mention ReactJS or VueJS components. So I decided to take the easy path with the codepen.io snippet.

Enough complaining: let’s move on, and I hope this post helps you get started faster than I did!

NB: did I mention Go modules? OK, OK… later!

Setting up my DEV environment

First, set up some local runtimes:

```shell
# GO
$> brew install go
...
$> go help gopath # MUST read

# Node.JS
$> brew install nvm
$> cat >> ~/.zshrc << EOF
export NVM_DIR="$HOME/.nvm"
[ -s "/usr/local/opt/nvm/nvm.sh" ] && . "/usr/local/opt/nvm/nvm.sh" # This loads nvm
[ -s "/usr/local/opt/nvm/etc/bash_completion.d/nvm" ] && . "/usr/local/opt/nvm/etc/bash_completion.d/nvm"
EOF
$> source ~/.zshrc # reload the config so that nvm is available in the current shell
$> nvm install --lts
Installing latest LTS version.
Downloading and installing node v12.16.2...
Downloading https://nodejs.org/dist/v12.16.2/node-v12.16.2-darwin-x64.tar.xz...
##################################################################################################################################### 100.0%
Computing checksum with shasum -a 256
Checksums matched!
Now using node v12.16.2 (npm v6.14.4)
Creating default alias: default -> lts/* (-> v12.16.2)

# now using Node v12.16.2. If necessary, revert to macOS system version:
$> nvm ls
...
$> nvm use system
v13.12.0
```

Then, create a GCP account, install the Google Cloud SDK and configure your CLI:

```shell
gcloud auth login
gcloud config set project [PROJECT_ID]
gcloud config set compute/region [REGION] # europe-west1
```

For convenience, we will use local Redis and Firestore instances.

Local REDIS

Basically, it’s a one-liner:

```shell
docker run -d -p 6379:6379 --name redis-rate-limiter redis
```

Tadaa!

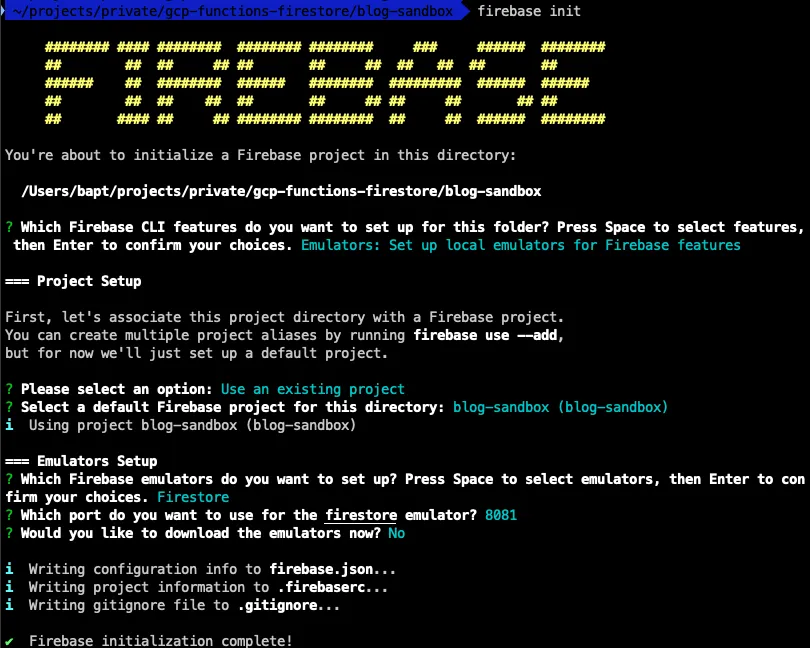

Local FIRESTORE

That one is available through the firebase-tools package.

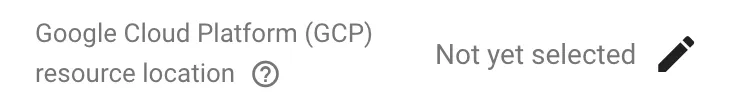

You may first need to create a project on https://console.firebase.google.com/ and configure its resource location:

Install the CLI and select your project:

```shell
npm install -g firebase-tools
firebase login
firebase init # pick 'emulators', then 'Firestore'
```

Then, you can start the Firestore emulator:

```shell
firebase emulators:start --only firestore
```

I added a Makefile to quickly run and restart those two daemons. You can find it on this GitHub repo.

Well, you are all set. You can start programming now!

Scaffolding the solution

FRONTEND

For the claps button, I copied the code from the codepen.io snippet, and it just worked as expected.

```html
<div>
  <button id="clap" class="clap">
    <span>
      <!-- SVG Created by Luis Durazo from the Noun Project -->
      <svg id="clap--icon" xmlns="http://www.w3.org/2000/svg" viewBox="-549 338 100.1 125">
        <path d="M-471.2 366.8c1.2 1.1 1.9 2.6 2.3 4.1.4-.3.8-.5 1.2-.7 1-1.9.7-4.3-1-5.9-2-1.9-5.2-1.9-7.2.1l-.2.2c1.8.1 3.6.9 4.9 2.2zm-28.8 14c.4.9.7 1.9.8 3.1l16.5-16.9c.6-.6 1.4-1.1 2.1-1.5 1-1.9.7-4.4-.9-6-2-1.9-5.2-1.9-7.2.1l-15.5 15.9c2.3 2.2 3.1 3 4.2 5.3zm-38.9 39.7c-.1-8.9 3.2-17.2 9.4-23.6l18.6-19c.7-2 .5-4.1-.1-5.3-.8-1.8-1.3-2.3-3.6-4.5l-20.9 21.4c-10.6 10.8-11.2 27.6-2.3 39.3-.6-2.6-1-5.4-1.1-8.3z"/>
        <path d="M-527.2 399.1l20.9-21.4c2.2 2.2 2.7 2.6 3.5 4.5.8 1.8 1 5.4-1.6 8l-11.8 12.2c-.5.5-.4 1.2 0 1.7.5.5 1.2.5 1.7 0l34-35c1.9-2 5.2-2.1 7.2-.1 2 1.9 2 5.2.1 7.2l-24.7 25.3c-.5.5-.4 1.2 0 1.7.5.5 1.2.5 1.7 0l28.5-29.3c2-2 5.2-2 7.1-.1 2 1.9 2 5.1.1 7.1l-28.5 29.3c-.5.5-.4 1.2 0 1.7.5.5 1.2.4 1.7 0l24.7-25.3c1.9-2 5.1-2.1 7.1-.1 2 1.9 2 5.2.1 7.2l-24.7 25.3c-.5.5-.4 1.2 0 1.7.5.5 1.2.5 1.7 0l14.6-15c2-2 5.2-2 7.2-.1 2 2 2.1 5.2.1 7.2l-27.6 28.4c-11.6 11.9-30.6 12.2-42.5.6-12-11.7-12.2-30.8-.6-42.7m18.1-48.4l-.7 4.9-2.2-4.4m7.6.9l-3.7 3.4 1.2-4.8m5.5 4.7l-4.8 1.6 3.1-3.9"/>
      </svg>
    </span>
    <span id="clap--count" class="clap--count"></span>
    <span id="clap--count-total" class="clap--count-total"></span>
  </button>
</div>
```

The JavaScript part comes with callback functions, ready to be integrated into your website.

🐥 Pretty basic, but that’s enough. The snippet only consumes two API_URI endpoints: one with GET, the other one with POST.

BACKEND - Functions Framework

Cloud Functions come with an optional framework, the Functions Framework. Its purpose is to make your code portable across:

- Cloud Functions
- your development machine
- Cloud Run
- Knative-based environments

That sounded like a good enough reason to get started with it. At the time of writing, the Functions Framework is available for Node.js and Go.

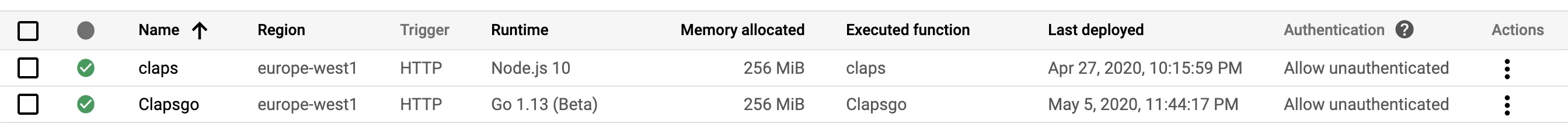

Below, you’ll find two versions of my Claps API, with very similar implementations: one in Node.js, the other in Go.

BACKEND - The NODE.JS version

Not much to say about the code. I could take advantage of the Express web framework for routing and for applying some middleware.

Dependency management in Node.js is simple, and searching for libs on npmjs.org makes things quick and easy.

Also, I switched from npm to yarn because the latter looked more robust, faster and more secure.

BACKEND - The GO LANG version

The start was a bit tricky because I could not find a web framework similar to Express.js. I even asked the contributors of the Go Functions Framework for some support, and it sounds like they are thinking about it.

Meanwhile, I’m using this trick to create a secondary HTTP multiplexer (http.ServeMux).

Alternative GO endpoint: https://europe-west1-personal-218506.cloudfunctions.net/Clapsgo

Connecting to FIRESTORE

Technically, the connection goes over gRPC and is wrapped by the Firebase dependencies.

Connecting to MEMORYSTORE

Here comes another surprise: connecting our Cloud Function to a managed GCP service. It’s good practice not to expose your Redis instance to the Internet, so you need internal routing.

Luckily, GCP’s got your back. Thanks to the "VPC Access Connector", you can plug Cloud Functions into any other VPC you own, or into Google-managed services like Memorystore.

Create a VPC

```shell
gcloud compute networks create serverless-vpc
```

Create a VPC Access Connector

```shell
gcloud compute networks vpc-access connectors create serverless-connector --network serverless-vpc --region ${GCP_REGION} --range 10.8.0.0/28
```

Grant access rights to the Service Account used by the Function

```shell
gcloud projects add-iam-policy-binding personal-218506 --member serviceAccount:personal-218506@appspot.gserviceaccount.com --role roles/compute.viewer
gcloud projects add-iam-policy-binding personal-218506 --member serviceAccount:personal-218506@appspot.gserviceaccount.com --role roles/compute.networkUser
```

Add a Redis Memorystore instance

```shell
gcloud redis instances create rate-limiting-redis --network serverless-vpc --region ${GCP_REGION}
gcloud redis instances list --region ${GCP_REGION}
```

Get the private IP

```shell
gcloud redis instances describe rate-limiting-redis --region ${GCP_REGION} --format='value(host)'
```

Deployment

This is the last step, where you bind your function to the VPC Access Connector.

Simple deployment:

```shell
gcloud functions deploy ${FUNCTION} \
  --entry-point ${FUNCTION} \
  --region ${GCP_REGION} \
  --runtime nodejs10 \
  --trigger-http \
  --timeout 10 \
  --memory 256MB \
  --allow-unauthenticated \
  --vpc-connector projects/${PROJECT_ID}/locations/${GCP_REGION}/connectors/serverless-connector \
  --set-env-vars PROJECT_ID=${PROJECT_ID} \
  --set-env-vars REDIS_HOST=${REDIS_INTERNAL_IP} \
  --set-env-vars ...
```

Alright, everything is up and running on GCP! 👌

Securing the Functions

Well, let’s make things a bit more serious. As you can see, my functions are publicly exposed on the Internet.

I would like to prevent denial of service and mitigate the consequences of attacks.

So, there are a few things I can set up: validating the Referer header used by my business logic, enforcing CORS, limiting the number of requests (POST and GET) per IP, and rate-limiting HTTP requests, per IP as well.

Actually, all of these security policies would fit better in an API Manager solution, as cross-cutting configuration for my APIs. I think it’s a better approach to have a central place for managing authorization, analytics, plans, API naming conventions and much more! But here I’m just exploring Cloud Functions capabilities on their own 😄

INPUT VALIDATION

The only input I get from my client is the Referer header, so I pass it through a simple regular expression matcher, and that’s it.

Of course, it’s easy to bypass with a programmatic HTTP client (CLI or lib). Here is the Go version:

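```go
// The real pattern isn't shown in this post; this one (an assumption) only
// accepts pages served from the blog's domain.
var referrerRegexp = regexp.MustCompile(`^https://baptistout\.net/`)

func validReferrer(request *http.Request) (bool, string) {
	referrer := request.Header.Get("Referer")
	return referrerRegexp.MatchString(referrer), referrer
}
```

CORS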

Easy to bypass as well; I’m just slowing down the attack. Below is the JavaScript version, with a middleware function passed to Express:

```javascript
// CORS
var corsOptions = {
  origin: function (origin, callback) {
    if (CORS_WHITELIST.indexOf(origin) !== -1) {
      callback(null, true)
    } else {
      callback(null, false)
    }
  }
}

app.use(cors(corsOptions));
```

REQUEST LIMITING

That’s again a simple one, but an effective one. Here is the Go version:

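```go
// ipCountPostMap is a package-level map[string]int: it lives as long as the
// function instance, so counts are per instance. ipCountMu (a sync.Mutex, name
// assumed) guards it because the HTTP server may handle requests concurrently.
ipCountMu.Lock()
ipCount := ipCountPostMap[ip] // 0 if this IP was never seen
if ipCount >= MAX_POST_PER_IP { // reject once MAX_POST_PER_IP posts were accepted
	ipCountMu.Unlock()
	writer.WriteHeader(http.StatusTooManyRequests)
	return
}
ipCountPostMap[ip] = ipCount + 1
ipCountMu.Unlock()
```

RATE-LIMITING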

Here comes some throttling! First, the JavaScript version with the redis-rate-limiter lib:

```javascript
var redisMiddleware = rateLimiter.middleware({
  redis: redisClient,
  key: function (req) {
    return req.headers['x-forwarded-for']
  },
  rate: '10/second'
});

app.use(redisMiddleware);
```

For the Go implementation, I used throttled/throttled, a GCRA-based rate-limiting library. Some explanations are given further below.

SECURED ENDPOINTS (OAUTH2)

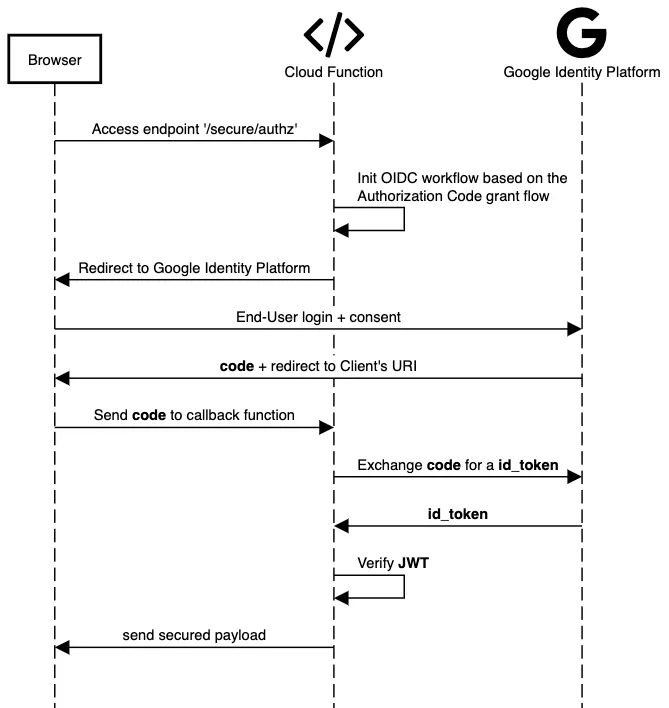

Finally, I wanted to see whether I could protect a particular endpoint with OAuth, so that only I can access it.

I looked up Google’s OpenID Connect endpoint (see my article about OIDC) and used suitable libraries to consume it.

So, the workflow is pictured right here:

The function authorizes access only if:

- the JWT issuer is https://accounts.google.com
- the JWT was signed by Google (asymmetric signature), which is verified using Google’s JWKS
- the email claim matches my personal email address

It’s a bare minimum; it’s also advised to check the validity of the aud and exp claims. I’m not checking the token expiration date because, in my implementation, the client asks for a new token every time it calls the endpoint (tokens are not reused). Keep in mind, though, that this is only a client-side habit: the function itself would still accept an older, captured token, and the exp check is what bounds that window.

In practice, I’m using the openid scope so that my Client gets an id_token, and the email scope so that the email claim is present.

With Go, it looks like there are two different official packages dealing with Google authorization servers: golang.org/x/oauth2/google and google.golang.org/api/oauth2/v2. The latter looks more recent.

Complementary discussion about rate-limiting

After some research, I found five ways of doing some kind of rate-limiting.

It all depends on how much you are willing to pay for that.

1. apply some middleware to your function(s) and connect it to a Redis instance (Memorystore, Redis on a VM or in a container, Kubernetes, etc.) ✅
2. reverse proxy rate-limiting (HAProxy, Nginx, Apache httpd, etc.)
3. API Manager rate-limiting (Apigee, Gravitee API Manager, etc.)
4. the tricky way: abuse the Cloud Functions instance lifecycle and use global-scope variables
5. the WTF way: define a custom quota for Cloud Functions usage in GCP IAM Quotas (applies globally)

Picking a solution

If I were to implement a rate-limiting solution in a real enterprise context, I would definitely go for solution #3, based on an API Manager! It’s great to have a central place for enforcing security rules, and, as I said earlier, for many more reasons!

Option #2 would fit a more traditional IT organization, where no API governance is in place yet.

Solutions #4 and #5 are just hacks, not serious options, and I hope you will never have to use them! 😀

The GCRA algorithm

In my example, the Node.js lib uses a basic rate-limiting implementation: once you have reached the limit, you have to wait for the current period to end. If I were to use a larger time span, say one hour, a malicious user could reach the limit within a few seconds and then be blocked for the next 59 minutes.

Fortunately, there are better rate-limiting algorithms, like GCRA (Generic Cell Rate Algorithm). It’s like an improved leaky bucket algorithm. Maybe you don’t want to read this great article, so let me picture the "leaky bucket" algorithm for you. Imagine you fill a bucket with some water. The bucket has a leak, so some amount of water escapes at a constant rate. Once the bucket is full, you just have to wait a few seconds before adding more small drops of water. Well, you get it. It’s a more permissive rate-limiting approach, but the downside is that it depends on a separate "dripping" process that might not be fast enough. GCRA fixes this with a time-centric approach and some maths: instead of draining a bucket, it stores a "theoretical arrival time" per key and compares each request’s timestamp against it.

Quick bench

Here we are: "What will happen when I monkey-click that button?"

One-shot test:

```shell
# Go
curl -X GET https://europe-west1-personal-218506.cloudfunctions.net/Clapsgo -H "Referer: https://baptistout.net/posts/openldap-helm-chart/" -H "Origin: https://baptistout.net"
0

# Node.js
curl -X GET https://europe-west1-personal-218506.cloudfunctions.net/claps -H "Referer: https://baptistout.net/posts/openldap-helm-chart/" -H "Origin: https://baptistout.net"
0
```

Okay, we are ready to go! For my bench, I’ll use a small HTTP load generator tool called hey.

Bench parameters: 20 requests, sent by 2 concurrent clients, each limited to 5 req/sec (hey’s -q limit applies per worker, so up to 10 req/sec overall).

GO

```shell
$> hey -n 20 -c 2 -q 5 -m GET -H "Referer: https://baptistout.net/posts/openldap-helm-chart/" -H "Origin: https://baptistout.net" https://europe-west1-personal-218506.cloudfunctions.net/Clapsgo

Summary:
  Total: 4.1113 secs
  Slowest: 2.3083 secs
  Fastest: 0.0754 secs
  Average: 0.2425 secs
  Requests/sec: 4.8646
  Total data: 20 bytes
  Size/request: 1 bytes

Response time histogram:
  0.075 [1] |■■
  0.299 [18] |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■
  0.522 [0] |
  0.745 [0] |
  0.969 [0] |
  1.192 [0] |
  1.415 [0] |
  1.638 [0] |
  1.862 [0] |
  2.085 [0] |
  2.308 [1] |■■

Latency distribution:
  10% in 0.0939 secs
  25% in 0.1065 secs
  50% in 0.1243 secs
  75% in 0.1678 secs
  90% in 0.2528 secs
  95% in 2.3083 secs
  99% in 0.0000 secs

Details (average, fastest, slowest):
  DNS+dialup: 0.0049 secs, 0.0754 secs, 2.3083 secs
  DNS-lookup: 0.0002 secs, 0.0000 secs, 0.0021 secs
  req write: 0.0000 secs, 0.0000 secs, 0.0001 secs
  resp wait: 0.2374 secs, 0.0752 secs, 2.2592 secs
  resp read: 0.0001 secs, 0.0000 secs, 0.0002 secs

Status code distribution:
  [200] 20 responses
```

Node.js

```shell
$> hey -n 20 -c 2 -q 5 -m GET -H "Referer: https://baptistout.net/posts/openldap-helm-chart/" -H "Origin: https://baptistout.net" https://europe-west1-personal-218506.cloudfunctions.net/claps

Summary:
  Total: 2.0929 secs
  Slowest: 0.1837 secs
  Fastest: 0.0374 secs
  Average: 0.0887 secs
  Requests/sec: 9.5561
  Total data: 18 bytes
  Size/request: 0 bytes

Response time histogram:
  0.037 [1] |■■■■
  0.052 [1] |■■■■
  0.067 [1] |■■■■
  0.081 [4] |■■■■■■■■■■■■■■■■■■
  0.096 [9] |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■
  0.111 [2] |■■■■■■■■■
  0.125 [0] |
  0.140 [0] |
  0.154 [1] |■■■■
  0.169 [0] |
  0.184 [1] |■■■■

Latency distribution:
  10% in 0.0644 secs
  25% in 0.0789 secs
  50% in 0.0884 secs
  75% in 0.0932 secs
  90% in 0.1493 secs
  95% in 0.1837 secs
  99% in 0.0000 secs

Details (average, fastest, slowest):
  DNS+dialup: 0.0064 secs, 0.0374 secs, 0.1837 secs
  DNS-lookup: 0.0003 secs, 0.0000 secs, 0.0035 secs
  req write: 0.0000 secs, 0.0000 secs, 0.0001 secs
  resp wait: 0.0821 secs, 0.0372 secs, 0.1194 secs
  resp read: 0.0001 secs, 0.0000 secs, 0.0003 secs

Status code distribution:
  [200] 18 responses
  [429] 2 responses
```

Even though 2 of the 20 requests hit the rate limit (HTTP 429), the response time distribution is amazing!

Same test with the POST method:

```text
# Go
Latency distribution:
  10% in 0.0901 secs
  25% in 0.1136 secs
  50% in 0.1365 secs
  75% in 0.1679 secs
  90% in 2.6269 secs
  95% in 3.5242 secs
  99% in 0.0000 secs

# Node.js
Latency distribution:
  10% in 0.1120 secs
  25% in 0.1414 secs
  50% in 0.1609 secs
  75% in 0.1794 secs
  90% in 0.2004 secs
  95% in 0.2861 secs
  99% in 0.0000 secs
```

Across all my scenarios, the warm-up time looks a bit longer with Go.

But on longer benchmark runs, Go got slightly better latency results, looking at the 25th and 50th percentiles.

Anyhow, both perform very well!

Lessons learned

In no particular order:

- Frontend development has become very mature and complex
- Go and Node.js are both mature and supported by large communities. I would say the learning curve is a bit steeper with Go, but the huge number of ready-to-use packages makes it as pleasant to use as Node.js
- One would love to be able to apply cross-cutting configuration to several functions at once; but yeah, that’s exactly the purpose of API Managers, which Google addresses with Apigee

What I loved: companies can easily add an API on top of their legacy IT. Just plug a VPN from your premises into GCP, and serverless capabilities are at your disposal (through the VPC Access Connectors)!

Leads

Using the Functions Framework gave me one more reason to investigate the Knative stack. I’ll try to focus on that in the coming weeks.

By the way, it would also be interesting to have a look at OpenFaaS.

If I were to use Cloud Functions at work, I would go for an infrastructure-as-code approach for deployment and version control, with tools such as Serverless, Pulumi or Terraform.

Closing words

Thank you for reading, I hope you learned something new.

And without any further ado, here is the button!