How Kubelet actually runs containers

You may wonder what the difference is between Docker, containerd and runc.

The answer is not straightforward. This blog post describes the role of each of these components and how they interact.

Let the show begin!

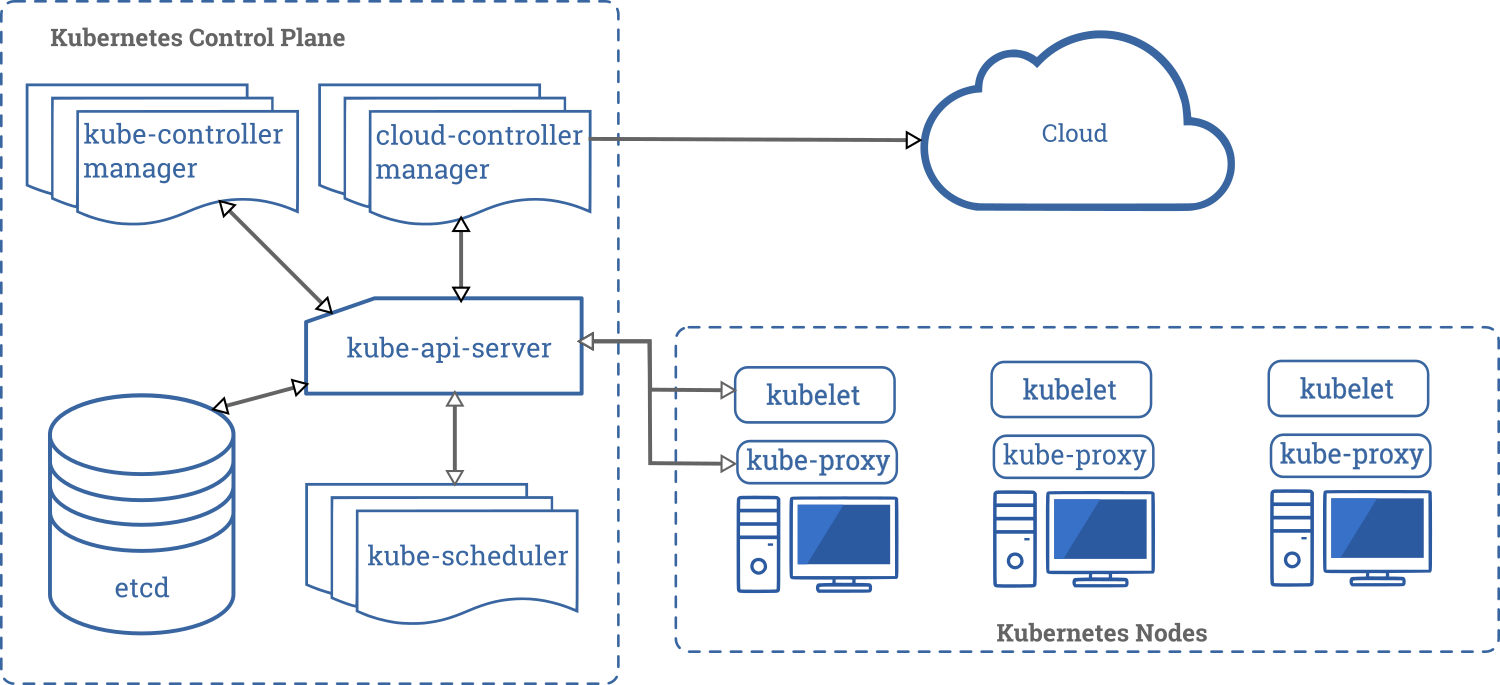

Well, here is what the Kubernetes architecture looks like at a high level:

Once the kube-scheduler has assigned pods to worker nodes, the kubelet on each node is in charge of starting their containers.

Actually, there are a few more layers between the kubelet and a running container.

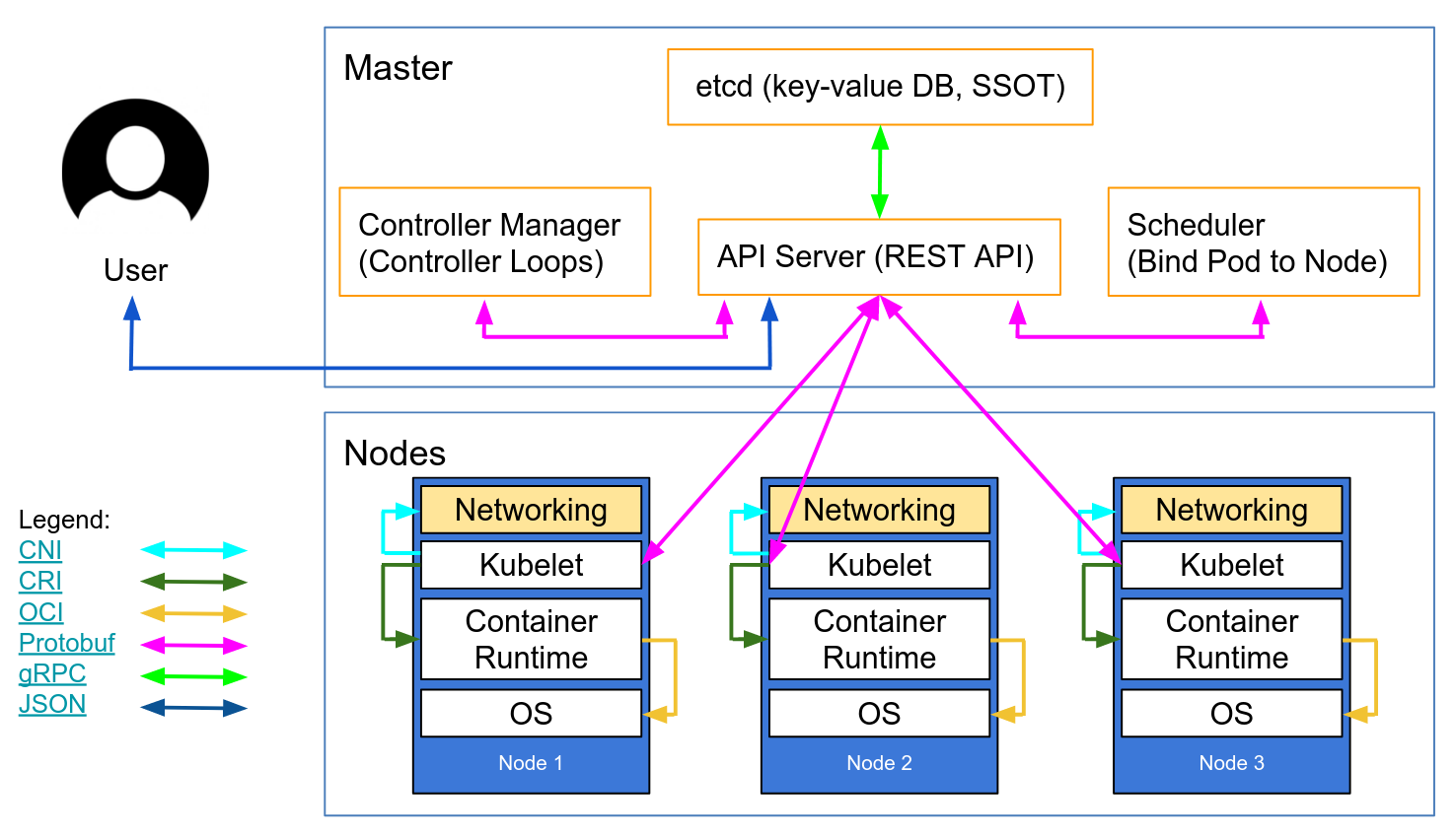

Have a look at this other diagram, particularly at the components running on a node:

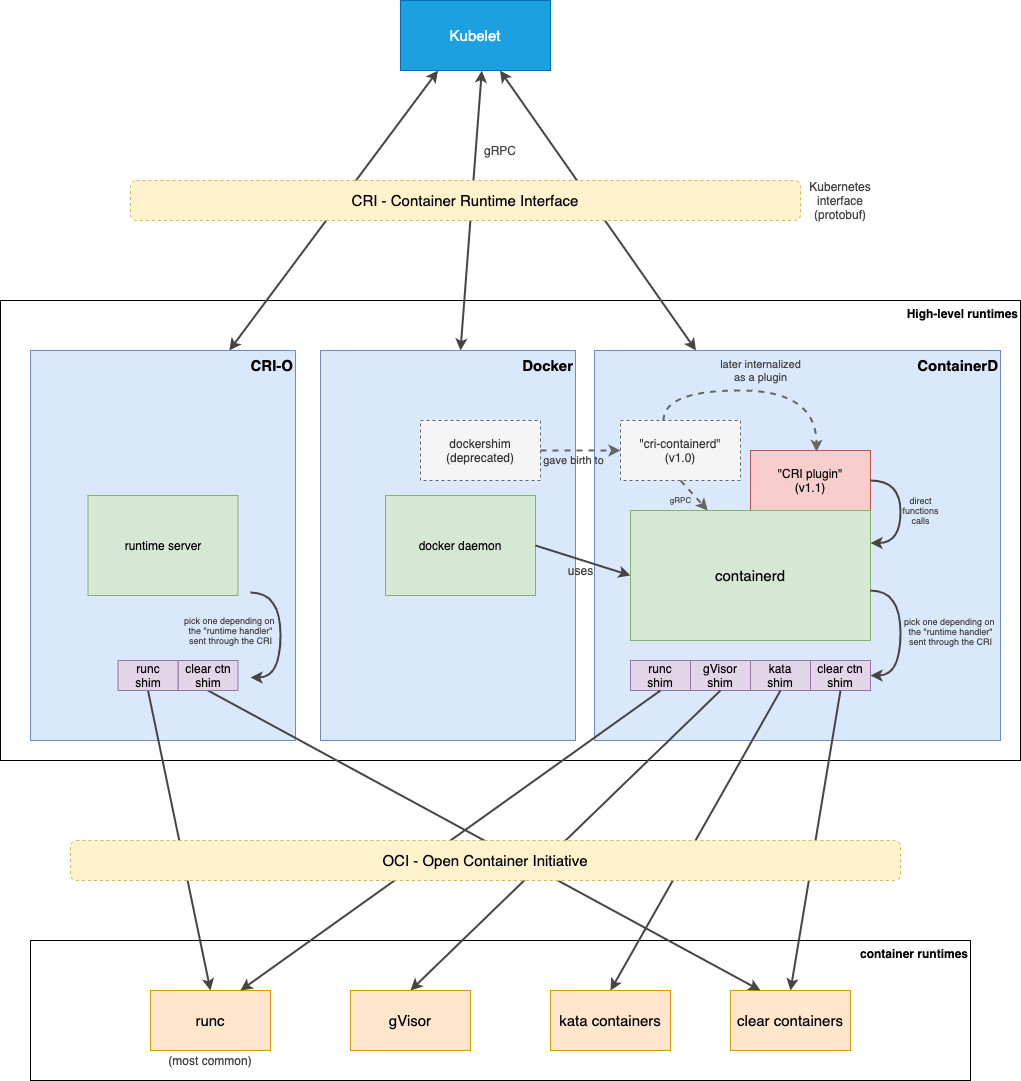

You can see that the kubelet talks to container runtimes over the Container Runtime Interface (CRI).

Deep dive

CRI

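The CRI is an interface that must be implemented by every "high-level" runtime that wants to manage containers for the kubelet: containerd or CRI-O, for instance. Docker was supported too, although it never spoke CRI itself: the kubelet relied on a built-in adapter, dockershim, which was removed in Kubernetes 1.24.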

The interface is defined in Protocol Buffers, and the kubelet communicates with these container runtime managers over gRPC, typically through a local Unix socket.
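To make the contract between the kubelet and a runtime more concrete, here is a minimal Go sketch. The method names mirror a few real CRI RPCs (`RunPodSandbox`, `PullImage`, `CreateContainer`, `StartContainer`), but the signatures are heavily simplified and hypothetical: the real ones are generated from the CRI protobuf definitions and invoked over gRPC, not in-process. `CRIRuntime`, `fakeRuntime` and `startPod` are illustrative names, and the call order is only a rough approximation of what the kubelet does when it starts a pod.

```go
package main

import (
	"fmt"
	"strings"
)

// CRIRuntime is a drastically simplified, hypothetical stand-in for the CRI
// RuntimeService and ImageService. Real methods take protobuf request and
// response messages and are called over gRPC.
type CRIRuntime interface {
	RunPodSandbox(podName, namespace string) (sandboxID string, err error)
	PullImage(image string) (imageRef string, err error)
	CreateContainer(sandboxID, name, imageRef string, argv []string) (containerID string, err error)
	StartContainer(containerID string) error
}

// fakeRuntime records every call instead of talking to containerd or CRI-O.
type fakeRuntime struct {
	calls []string
	next  int
}

func (f *fakeRuntime) newID(prefix string) string {
	f.next++
	return fmt.Sprintf("%s-%d", prefix, f.next)
}

func (f *fakeRuntime) RunPodSandbox(podName, namespace string) (string, error) {
	f.calls = append(f.calls, "RunPodSandbox "+namespace+"/"+podName)
	return f.newID("sandbox"), nil
}

func (f *fakeRuntime) PullImage(image string) (string, error) {
	f.calls = append(f.calls, "PullImage "+image)
	return image, nil
}

func (f *fakeRuntime) CreateContainer(sandboxID, name, imageRef string, argv []string) (string, error) {
	f.calls = append(f.calls, "CreateContainer "+name+" in "+sandboxID+": "+strings.Join(argv, " "))
	return f.newID("ctr"), nil
}

func (f *fakeRuntime) StartContainer(containerID string) error {
	f.calls = append(f.calls, "StartContainer "+containerID)
	return nil
}

// startPod approximates what the kubelet asks of the runtime for a
// single-container pod: create the sandbox, make sure the image is present,
// then create and start the container inside that sandbox.
func startPod(rt CRIRuntime, pod, ns, image string, argv []string) (string, error) {
	sandboxID, err := rt.RunPodSandbox(pod, ns)
	if err != nil {
		return "", err
	}
	ref, err := rt.PullImage(image)
	if err != nil {
		return "", err
	}
	ctrID, err := rt.CreateContainer(sandboxID, pod, ref, argv)
	if err != nil {
		return "", err
	}
	return ctrID, rt.StartContainer(ctrID)
}

func main() {
	rt := &fakeRuntime{}
	id, err := startPod(rt, "sleeper", "default", "busybox:1.36", []string{"sh", "-c", "sleep 1800"})
	if err != nil {
		panic(err)
	}
	fmt.Println("started", id)
	for _, c := range rt.calls {
		fmt.Println(c)
	}
}
```

Coding against an interface like this is also why the kubelet does not care whether containerd or CRI-O sits behind the socket.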

The big picture

This diagram is my synthesis of a lot of blog posts, articles and project READMEs.

It may still be slightly inaccurate but, at least, you can easily locate all these components at a glance.

As you can see, the kubelet talks to "high-level runtimes" through the CRI. These runtimes, in turn, drive "low-level container runtimes" such as runc through small programs called shims.

These shims let containers have their own process lifecycle, independent of the high-level runtime. That is a good thing: containers can keep running while the daemon that started them has to restart!
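A real shim (such as containerd's) does much more than this: it outlives the daemon that spawned it, relays the container's stdio and exposes an API. The minimal Go sketch below only illustrates the core idea: the container process is a child of the shim, which waits for it and keeps its exit status, so the high-level runtime does not have to be its parent. `runUnderShim` is a hypothetical name, and the code is Unix-only (`Setsid` puts the child in a new session, detached from the caller's terminal).

```go
package main

import (
	"errors"
	"fmt"
	"os/exec"
	"syscall"
)

// runUnderShim plays the role of a (very reduced) shim: it is the direct
// parent of the "container" process, waits for it and returns its exit code.
func runUnderShim(argv ...string) (int, error) {
	cmd := exec.Command(argv[0], argv[1:]...)
	// New session: the child is not tied to our controlling terminal.
	cmd.SysProcAttr = &syscall.SysProcAttr{Setsid: true}
	if err := cmd.Start(); err != nil {
		return -1, err
	}
	fmt.Println("shim: container process started with pid", cmd.Process.Pid)
	if err := cmd.Wait(); err != nil {
		// A non-zero exit is reported as *exec.ExitError; anything else is a real failure.
		var exitErr *exec.ExitError
		if !errors.As(err, &exitErr) {
			return -1, err
		}
	}
	// ProcessState is populated once Wait has returned.
	return cmd.ProcessState.ExitCode(), nil
}

func main() {
	code, err := runUnderShim("sh", "-c", "sleep 1; exit 3")
	if err != nil {
		panic(err)
	}
	fmt.Println("shim: container exited with status", code)
}
```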

Low-level runtimes

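Containers run with their own PID 1 and their own view of the system, isolated from the host OS, thanks to the namespaces the low-level runtime creates for them; cgroups, set up by the same runtime, limit the resources (CPU, memory, etc.) they can consume.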

In addition, when using gVisor, containers run on top of a user-space kernel. Don’t ask me too much about it, but it sounds good when you care about low-level security. Quote: "[user spaces] … are providing additional protection from host kernel vulnerabilities".

Finally, low-level runtimes take care of running the command described in the container manifest, for example bash -c "sleep 1800".

High-level runtimes

Back to things we know: CRI runtimes.

A few years ago Docker was the only option, and it was also managing too many things: image builds, pushing and pulling images to and from registries, storage for the container layers, networking and much more!

So the Kubernetes community responded by creating the CRI layer, and Docker played along by donating its container daemon, containerd, to the community.

Docker is still alive, inside and outside the Kubernetes world, and it actually uses containerd under the hood.

Another, "Kubernetes-native" high-level runtime appeared some time ago: CRI-O. Its goal is to reduce the number of layers between the kubelet and the container itself.

Finally, there is another CRI runtime called Frakti, not present in the diagram, which appears to be dedicated to running containers inside their own VMs, using the Kata Containers "low-level" runtime. Security first!

Stop using the docker CLI

Sometimes it is tempting to run docker ps when you want to "debug" running containers, for example ones that seem out of sync with your kubelet.

You’d better use crictl. This CLI works with any CRI runtime, and its options are very similar to docker’s. It is better because it understands the notions of "pods" and "namespaces", so you won’t lose time looking for a given pod (in a given namespace).

Hope this helped, and long live containers!